UKG Autotime: Realtime Reporting and Analytics

The range of reporting and analytics that you need from UKG Autotime is broad enough to cause fits. On the one hand, you need real-time reporting to manage operations. You need to know who showed up for work in order to make sure you have the staff to get the work done today. Seeing this information tomorrow morning won’t do you any good. On the other hand, you need more sophisticated analytics in order to manage performance and improve operations over time.

In the past, this often meant compromise. Operational reporting and analytics were separate and disconnected. Reporting performance was lousy, and you were unable to get to the level of detail you needed to understand what was happening with confidence to make changes.

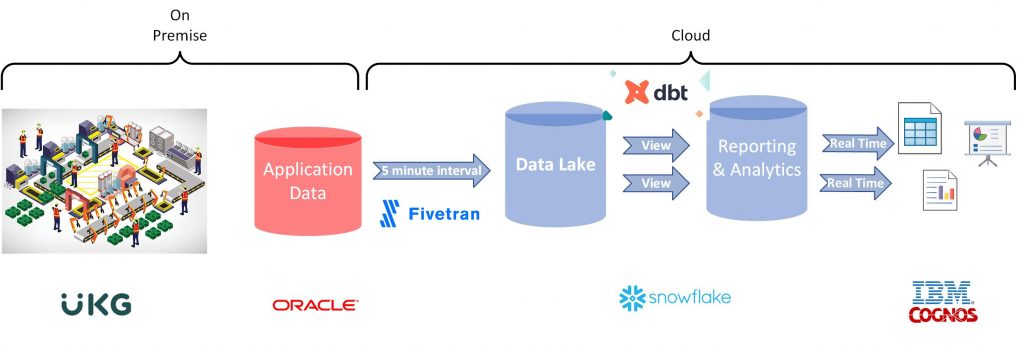

Here is how I solved these problems with just a few simple tools and a different approach.

First, I “decoded” the Autotime metadata to understand the relationships between tables and fields in the operational database. This provided the knowledge and understanding of the source data needed to identify which tables I needed, and to create a metadata model and map data from the source to reports.

Next, I connected FiveTran’s HVA connector to the operational database to synchronize the tables from the source database to the data lake in SnowFlake with a 5 minute interval. This provided a reliable data pipeline from the source system to Snowflake, without contention on the source system. Whether you use the same connector I used, HVR or the classic FiveTran connector for your database, the process of set-up and configuration is pretty easy and reliable.

Then, using the AutoTime metadata as a guide, I created a series of data models using dbt to deliver reporting and analytical models using runtime views. Because the dbt models are configured as views,all latency is eliminated, while providing bomb-proof reliability. Since there are no ETL jobs to run, there is nothing to fail, and nothing to support. Plus, the run time views reflect the source data immediately – there is no latency beyond the FiveTran synchronization. In this case, dbt provided a very simple way to create data models, while SnowFlake provides the high performing data platform for transformation, reporting and analytics in near real time.

Finally, I developed reports and dashboards, augmented with machine learning and AI, using IBM Cognos. Both operational reporting and advanced analytics are built on the same metadata layer, which is based on the operational data. This means we can navigate from the most advanced analytics, all the way down to the lowest level of detail, providing the level of data and confidence needed to manage and improve operations. And all of it, even the analytics, are essentially in real time.

Here is a look at how the solution fits together.

This solution is far more reliable than traditional on premise ETL loading a data warehouse operation, for a variety of reasons.

First, separating the extract and load pipelines using FiveTran insulates the solution from schema changes in the source system, and automatically recovers interruptions, before it has any impact, and usually before anyone even notices. This also provides all of the change data capture logic, so the process of keeping the reporting database in sync is reliable and handled.

Second, using run-time views on a high-performing platform like Snowflake, eliminates the need to run ETL as a scheduled job. The ETL doesn’t fail, because there are no ETL jobs running that can fail. In fact, we only need to re-run the models if we want to make a change. There is no batch window, no data latency, and the risk of ETL failure is eliminated. There is no scheduling, triggering, orchestrating, or any dependencies to manage, restart, or reload. This is one of those situations where we get so much more, while doing so much less. Makes me feel guilty!

Finally, IBM Cognos is built on a metadata layer that covers both operational reporting, ad hoc data exploration and advanced analytics. The metadata layer is easy to develop and maintain, and coupled with Snowflake, Cognos provides reporting, exploration and advanced analytics capabilities that perform well with very little or no maintenance or tuning. And because there is only one metadata layer, we have the single version of the truth.

All of these things are running in the cloud with 99.99% uptime. Even more, backup and recovery, and failover and software updates are managed by someone else, and easy to operate if needed. It’s a bit ironic that the source system is less reliable and more difficult to manage than all of these dependent parts, while operating a critical business process.

One thing to note – I can’t emphasize enough how easy this is in hindsight.

The integration with both Oracle and Snowflake made the data pipeline with FiveTran very easy to set up, and reliable. This saved many hours of ETL development upfront, and many hours of support, and maintenance in the future. Snowflake has also performed exceptionally well, providing impressive performance at a low cost, with features that make both the ETL/ELT processes and reporting work better. This has made both dbt and Cognos work like we always wish they would, and sometimes need them to perform in order to deliver in real time.

This has enabled us to shift workloads – for example, to perform more transformations with dbt, in order to to make report development easier, improve content and perform faster, with far better reliability.

If you have done similar work, I would love to connect with you and compare notes. I looked at many options in crafting this solution, and would be happy to share my experience. FiveTran, Snowflake, dbt and Cognos work very well together. This is one of those projects that gets me excited about analytics again.

EmL – elealos@quantifiedmechanix.com