Here is a framework built around a different approach to defining requirements, one that works.

Most importantly, a successful analytics project is a partnership. The business users should want to help IT create an effective and robust solution, and IT should want to create a solution that delivers exceptional business value. Make sure you have the right people working on it. A junior business analyst who doesn’t understand the business processes or objectives involved is not going to make things easier. The same is true for the technical people, who need to be able to meet business users where they are, with a basic grasp of business concepts and operations. Communication is challenging, and a team that is not working for each other can be fatal to any project, because one of the foundational principles is to understand the objective of the project or solution and the value it should provide from a business perspective.

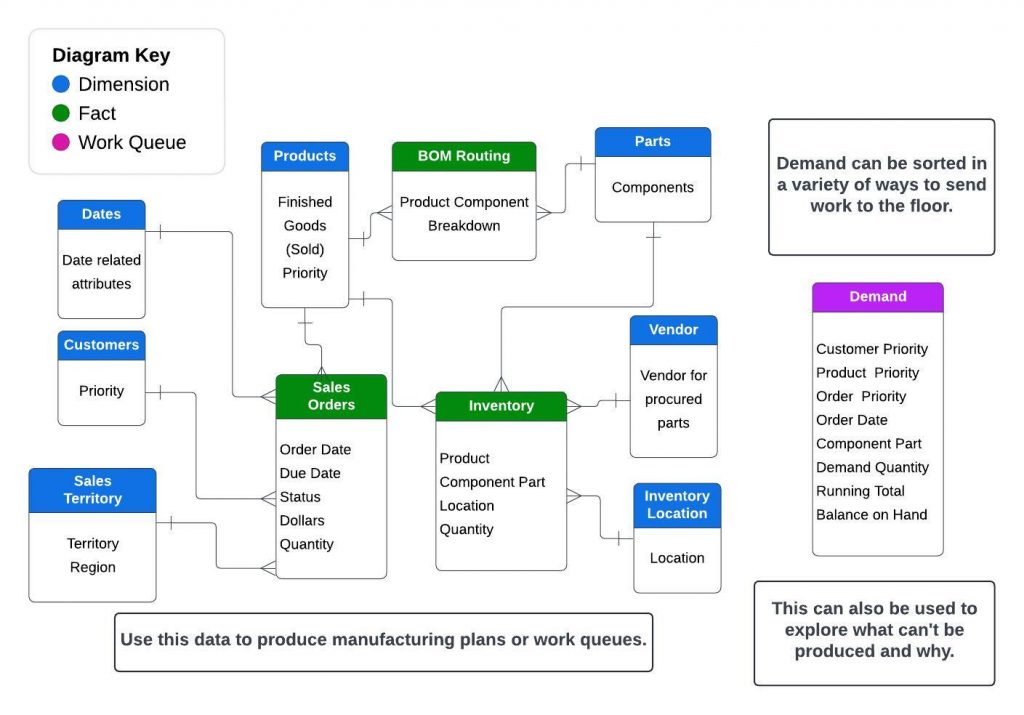

I am going to use an example from a past project that went well. The objective of the project was to create automated production plans for a manufacturing cell that produced a variety of pumps. To do this, we needed to collect orders for the pumps and enrich the data slightly in order to assign a priority to each order. Priority was based on a mix of product, customer and order related information. For example, certain customers had priority, then key products; otherwise it was based on age of order or total order value. But the plan also needed to take inventory into consideration. If the parts needed to assemble the finished product were not available, the order should be excluded. If the finished product was already in inventory, the order might also be excluded.
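
To make the rules concrete, here is a minimal Python sketch of how that kind of prioritization could be expressed. It is not the code from the project. The customer and product names, the field names, and the tie-breaking order (age of order first, then total value) are all assumptions made for illustration, as is the choice to exclude an order only when finished stock fully covers it.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Order:
    order_id: str
    customer: str
    product: str
    order_date: date
    total_value: float
    quantity: int
    parts_available: bool          # are all components on hand?
    finished_units_in_stock: int   # finished pumps already in inventory


# Hypothetical reference data; the real lists would come from the business.
PRIORITY_CUSTOMERS = {"ACME"}
KEY_PRODUCTS = {"PUMP-100"}


def is_plannable(order: Order) -> bool:
    """Exclude orders that cannot or need not be built (assumed rules)."""
    if not order.parts_available:
        return False
    if order.finished_units_in_stock >= order.quantity:
        return False
    return True


def priority_key(order: Order) -> tuple:
    """Smaller tuples sort first: customer tier, then key product, then the rest."""
    if order.customer in PRIORITY_CUSTOMERS:
        tier = 0
    elif order.product in KEY_PRODUCTS:
        tier = 1
    else:
        tier = 2
    # Within a tier: oldest order first, then highest value (assumed tie-break).
    return (tier, order.order_date, -order.total_value)


def build_plan(orders: list[Order]) -> list[Order]:
    """Return the plannable orders in priority sequence."""
    return sorted((o for o in orders if is_plannable(o)), key=priority_key)


orders = [
    Order("SO-1", "Globex", "PUMP-200", date(2024, 1, 5), 5000.0, 10, True, 0),
    Order("SO-2", "ACME", "PUMP-200", date(2024, 2, 1), 1000.0, 5, True, 0),
    Order("SO-3", "Globex", "PUMP-100", date(2024, 1, 20), 2000.0, 2, True, 0),
    Order("SO-4", "Initech", "PUMP-300", date(2024, 1, 1), 9000.0, 4, False, 0),
    Order("SO-5", "Initech", "PUMP-300", date(2024, 1, 2), 800.0, 3, True, 3),
]

plan = build_plan(orders)
print([o.order_id for o in plan])  # ['SO-2', 'SO-3', 'SO-1']
```

SO-4 drops out because parts are missing and SO-5 because finished stock already covers it. Keeping the rules in two small functions makes it easy to review them with users and change them as requirements become clearer.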

The value of this project was to improve the production of pumps so that production was aligned with strategic business and operational goals. That essentially means making sure the cell was working on the right orders. The existing planning process was labor intensive, time consuming, and error prone. As a result, the cell often worked on the wrong things and production and efficiency suffered.

With this understanding of business value, objectives and goals, the next step is to investigate and develop more detailed requirements with users. We think we do this differently, more effectively than anyone else and it is a significant reason for our success. In too many cases, we see others collecting and documenting requirements using a business analyst who meets with and interviews stakeholders, but has a limited understanding of what a solution might look like, and possibly even a limited understanding of the business problem. As a result, the analyst essentially documents the current process. Meanwhile, gathering and documenting requirements takes a long time, there is considerable back and forth with stakeholders to “sign off” and nothing has been done to test and understand feasibility, the availability of different approaches, or the level of effort the different options require.

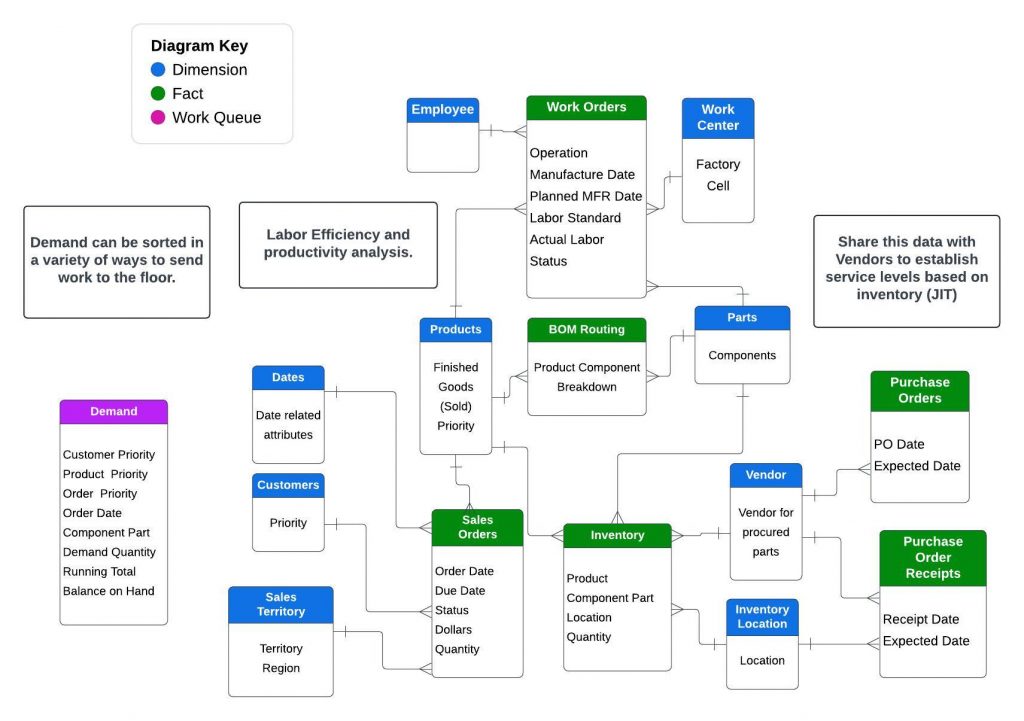

At this phase of a project we also start with stakeholders, but with an architect who has equal technical and business solution expertise. Our goal is to identify the data they are currently using and map it back to the core systems and processes that create the data. Often this is a mix of reports, in this case orders and inventory, along with other data sources providing BOM routings, product data and customer data. We then create a rough logical data model based on those processes and review it with stakeholders as part of the design phase. We intend to deliver a physical model based on this, but we are not asking for sign-off, just confirmation that we are reasonably aligned on solution design and data. This takes a lot of pressure off this step.

In the current example we might have a logical model that looks like this.

The next step is to make a working prototype where we deliver this model and produce visuals, reports or relevant content. Creating working data models with dbt and Snowflake has very little friction and a roughed out version of this data model can be produced quickly. This process also uncovers any challenges or unforeseen engineering difficulties early in the process. These issues can be assigned to subject matter experts to solve while the project continues to make progress. Oftentimes these issues are easily resolved as the solution begins to take shape.

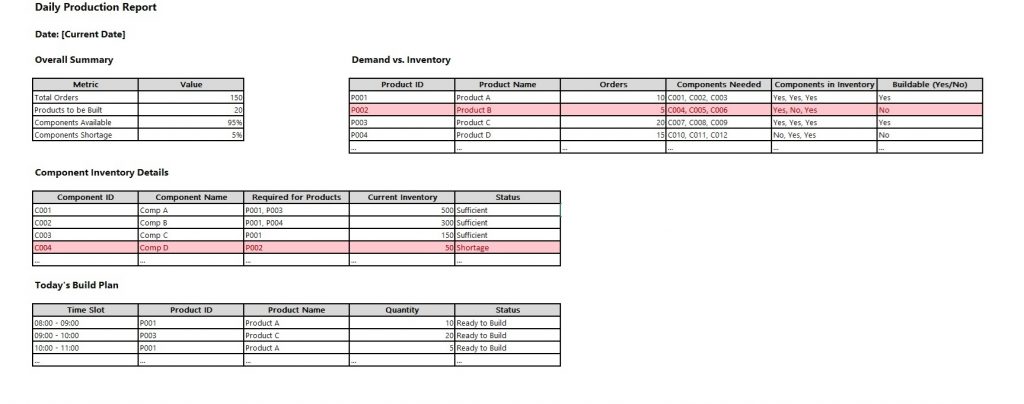

Creating content with this sort of model is easy with tools like Cognos Analytics, for example. The physical data model is easy to understand, performs well and supports a broad range of visuals, queries and calculations. The hardest part is limiting the prototype to something simple and letting the users drive the process of designing content. Here is an example of what a first draft prototype in this case might look like. Again, we did not want to deliver too much. We still want the users to drive design, and we are also trying to validate data.

Making these prototypes also exposed the things we needed to do to make interacting with the data user friendly, and make sure performance exceeded expectations. Without this approach we might have invested too much time into these tasks and still missed the mark. Where and how users would interact with the data is hard to imagine without actually doing it, but creating a rough MVP (minimum viable product), and putting it into “quiet production” and then working with the users to add functionality and evolve worked very well. Building this on Snowflake also helped, because it is essentially designed for these database workloads, and we can scale up or down to meet user expectations without much effort, cost or delay.

We usually use the existing spreadsheet process to validate the output of the new application. Essentially they run in parallel. There are often differences in the data at first, but this helps us clarify requirements efficiently because we started with the right data and an approach that was confirmed earlier. We find this approach to requirements much more efficient than fully tearing apart the existing process. All too often there are steps in the old process that nobody understands or remembers. Sometimes people leave or change jobs and some of the “tribal knowledge” of the process disappears. Sometimes there is just “drift”: the sources change, or some other process changes without anyone understanding the implication, and the data is wrong.
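
A parallel run like this boils down to a reconciliation between two outputs. The sketch below shows one way that comparison could look in Python. The order IDs, the planned quantities and the tolerance are made up for illustration, and in practice the two inputs would be loaded from the spreadsheet and from the new model rather than typed in.

```python
def reconcile(legacy: dict, new: dict, tolerance: float = 0.01) -> list:
    """Compare two {order_id: planned_quantity} mappings.

    Returns a list of (order_id, issue, legacy_value, new_value) tuples,
    one per difference, sorted by order_id.
    """
    differences = []
    for order_id in sorted(legacy.keys() | new.keys()):
        old_value = legacy.get(order_id)
        new_value = new.get(order_id)
        if old_value is None:
            differences.append((order_id, "missing in legacy", old_value, new_value))
        elif new_value is None:
            differences.append((order_id, "missing in new", old_value, new_value))
        elif abs(old_value - new_value) > tolerance:
            differences.append((order_id, "value mismatch", old_value, new_value))
    return differences


# Hypothetical outputs of the spreadsheet process and the new application.
legacy_plan = {"SO-1": 10, "SO-2": 5, "SO-3": 2}
new_plan = {"SO-1": 10, "SO-2": 6, "SO-4": 4}

for row in reconcile(legacy_plan, new_plan):
    print(row)
# ('SO-2', 'value mismatch', 5, 6)
# ('SO-3', 'missing in new', 2, None)
# ('SO-4', 'missing in legacy', None, 4)
```

Each row in the result is a question to take back to the users: is the new application wrong, or is the spreadsheet?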

We usually find errors in the original process we are replacing at this stage. Because we have delivered the foundation data, we can usually trace back to the most granular level of detail and identify the issue very quickly and definitively. In our experience, good data engineering, tests and validation at the foundation level take care of 85% or more of the data quality issues before they occur.
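
For readers who have not seen foundation-level tests, the sketch below shows the idea in plain Python. dbt ships generic tests named `not_null`, `unique` and `relationships`, and the functions here mimic what those checks look for. They are not dbt's implementation, and the sample rows and column names are hypothetical.

```python
from collections import Counter


def not_null(rows, column):
    """Return the rows where `column` is missing or None."""
    return [row for row in rows if row.get(column) is None]


def unique(rows, column):
    """Return the non-null values of `column` that appear more than once."""
    counts = Counter(row[column] for row in rows if row.get(column) is not None)
    return sorted(value for value, n in counts.items() if n > 1)


def relationships(child_rows, child_column, parent_rows, parent_column):
    """Return non-null child values that have no matching parent value."""
    parent_values = {row[parent_column] for row in parent_rows}
    return sorted({
        row[child_column]
        for row in child_rows
        if row.get(child_column) is not None
        and row[child_column] not in parent_values
    })


# Hypothetical foundation tables.
order_rows = [
    {"order_id": "SO-1", "customer_id": "C1"},
    {"order_id": "SO-2", "customer_id": None},
    {"order_id": "SO-2", "customer_id": "C9"},
]
customer_rows = [{"customer_id": "C1"}]

null_rows = not_null(order_rows, "customer_id")
duplicate_ids = unique(order_rows, "order_id")
orphans = relationships(order_rows, "customer_id", customer_rows, "customer_id")

print(null_rows)      # [{'order_id': 'SO-2', 'customer_id': None}]
print(duplicate_ids)  # ['SO-2']
print(orphans)        # ['C9']
```

Checks like these are cheap to run on every load, so problems surface at the source table rather than in a report weeks later.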

Because we are capable and confident with the tools, we do a lot of this work in working sessions with users. This gives them a much better understanding of how we do our work, confidence in the data and ownership of the solution. On more than one occasion I have had a nontechnical user make a significant contribution to solution design because of this approach. It is also a benefit for the technical team to be part of the discussions with users on the business side. It helps guide and inform solution design, and even documentation within the models. When technical users understand the business and its goals, solution design is better. And immediate feedback is much more effective than feedback that arrives days later.

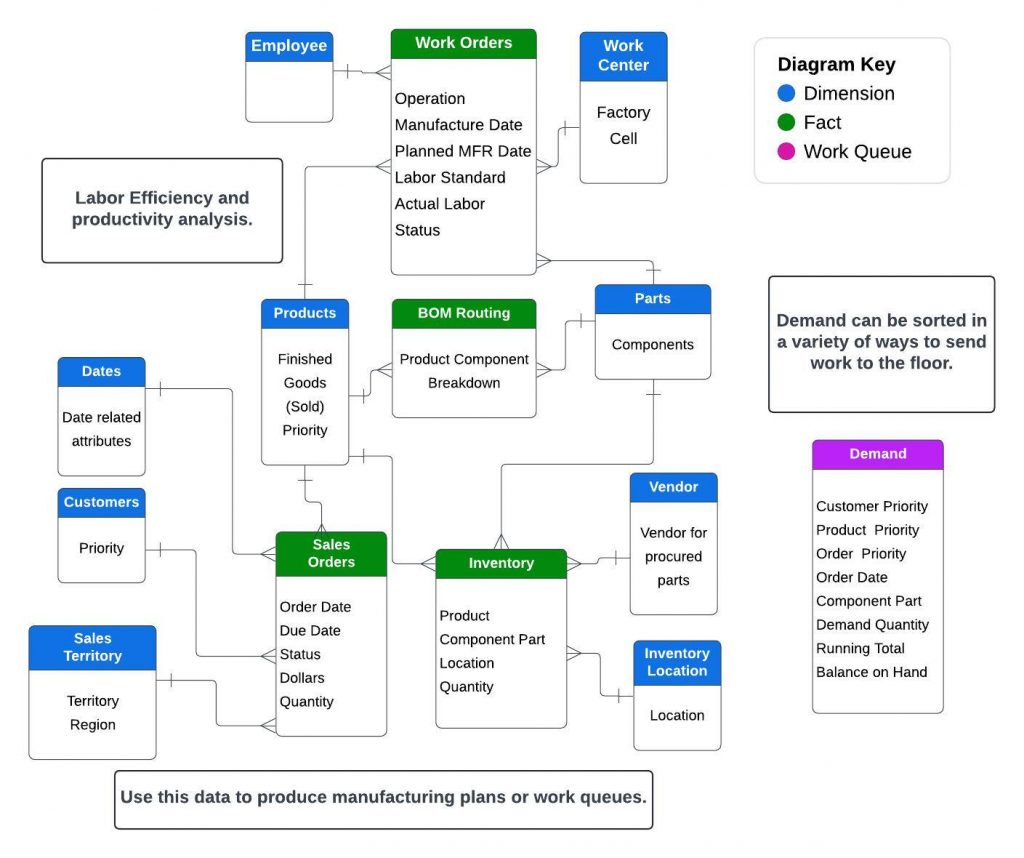

This phase of this solution evolved to include scenario planning with inputs for hypothetical orders or additional demand from safety stock, and grew to include labor efficiency and productivity. Because much of the foundation data had already been delivered, adding this data and capability was incremental.

This labor analytics capability ties directly back to the business value we set out to deliver at the outset of the first project. The first phase was essentially about making the plans better using better data, to improve production. The second phase added mechanisms for what-if analysis, so that planners could see the near-term impact of large orders, anticipate disruptions and act proactively. The most recent phase added labor analysis, which helped identify causes of work disruptions like faulty components, helping managers spot issues and take action more quickly, which has improved performance and efficiency.

If it were up to me, I would add vendor analysis next to manage component inventory more effectively, and to track and analyze defective components and timely deliveries. Here is how that model looks:

Creating a successful solution like this is a partnership between business users and IT. Both sides collaborate closely, with business users eager to help IT create an effective solution, and IT focused on delivering exceptional business value. It’s crucial to have the right people involved: knowledgeable business analysts who understand the business processes and objectives, and technical staff who can communicate effectively with business users. Clear communication and mutual support are foundational principles, ensuring the project’s objectives and value are well-understood from a business perspective.

This project is a good example of this approach in action. The project aim was to align production with operational goals. The existing manual planning process was labor-intensive and error-prone, leading to inefficiencies. By engaging both business users and IT, detailed requirements were developed collaboratively using the data and development processes to reach the goal. A knowledgeable architect helped map current data to core processes, creating a logical data model that evolved through rapid prototyping and user feedback based on real applications, data and use cases. Tools like dbt, Snowflake, and Cognos Analytics facilitated this process, enabling quick adjustments and ensuring the final solution was user-friendly and efficient.

Throughout the project, iterative development with continuous user involvement was effective. This approach not only clarified requirements efficiently but also built user trust and ownership. By validating outputs against existing processes and addressing data quality issues early, the project minimized risks and maximized alignment with business needs. Subsequent phases added capabilities like scenario planning and labor analytics, further enhancing the solution’s value with much less incremental effort. This iterative, collaborative methodology proves more effective than traditional methods, ensuring solutions are aligned with business goals and adaptable to evolving needs.

I’d like to hear your thoughts. Send me a note.

elealos@quantifiedmechanix.com