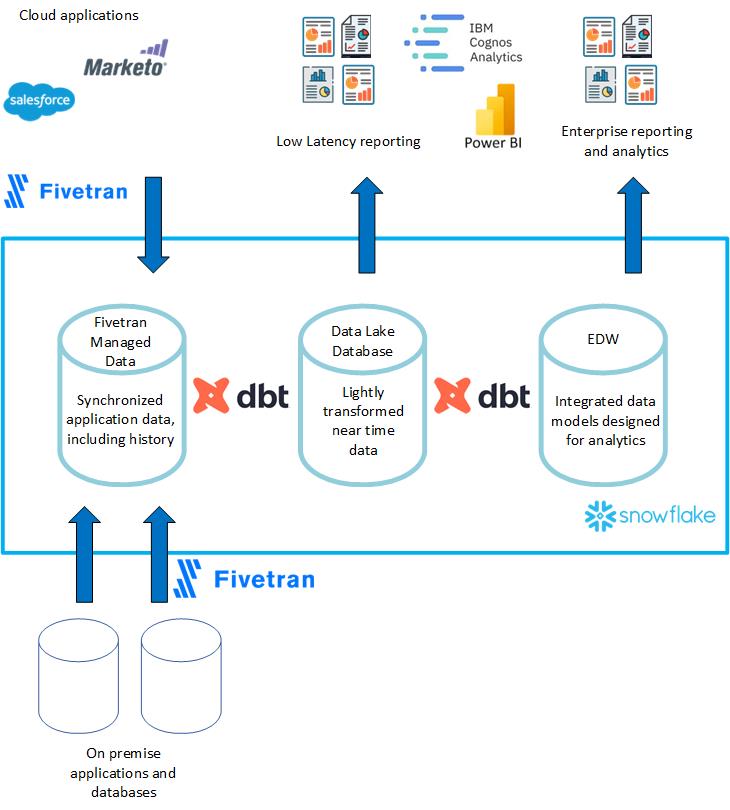

The following is an overview of the platform architecture used to facilitate the process of ingesting data into the Snowflake platform and transforming it into data models to facilitate a wide variety of reporting and analytics. I recently developed this with a client and felt that the general information would be useful to others going down the path of exploring and adopting tools and platforms like Snowflake, Fiveran and dbt.

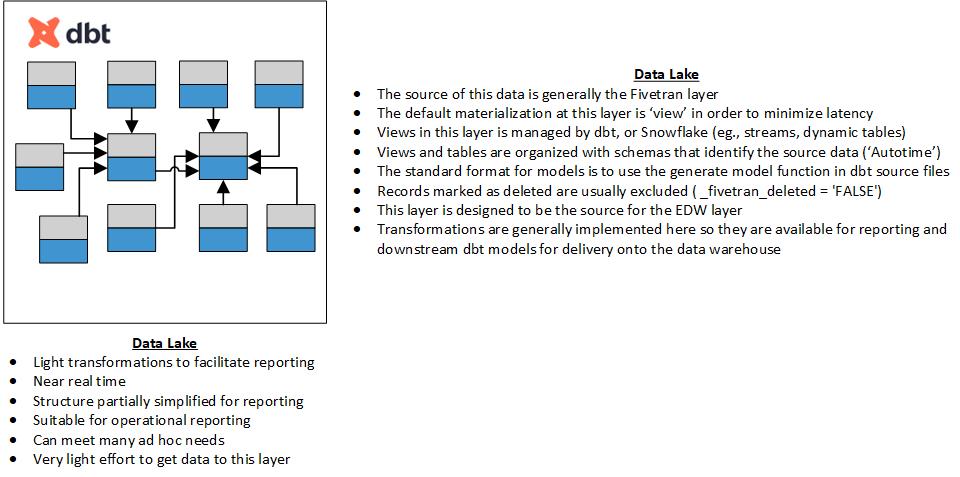

Fivetran manages the extract and load steps into a Fivetran managed database in Snowflake. We use dbt to deliver views to a “data lake” layer (Snowflake database) making light transformations, including removing deleted rows, and other simple in line transformations that do not require data from other sources. For example, we rename columns for clarity, or create summon groupings using if/then or decode logic. We have a semantic layer created in Cognos that supports multiple use cases (operational reporting, ad hoc access, data exploration). This is also the layer where some users in the line of business use Power BI, Tableau and other tools.

The data lake layer has very few moving parts. The dbt models are very fast (they are just views) and report performance is adequate with very limited resources (no additional storage, little or no compute during ETL). This layer is where low latency operational reporting happens because the data is refreshed in near-real-time based on FiveTran interval.

We use the data lake layer as the source for the enterprise data warehouse layer (EDW). The dbt models used to deliver this layer are performing integrations between applications through conformed dimensions, and performing other transformations to improve performance or reduce complexity to facilitate analytics and reporting. This requires creating and assigning surrogate keys (more detail regarding methods on this later).

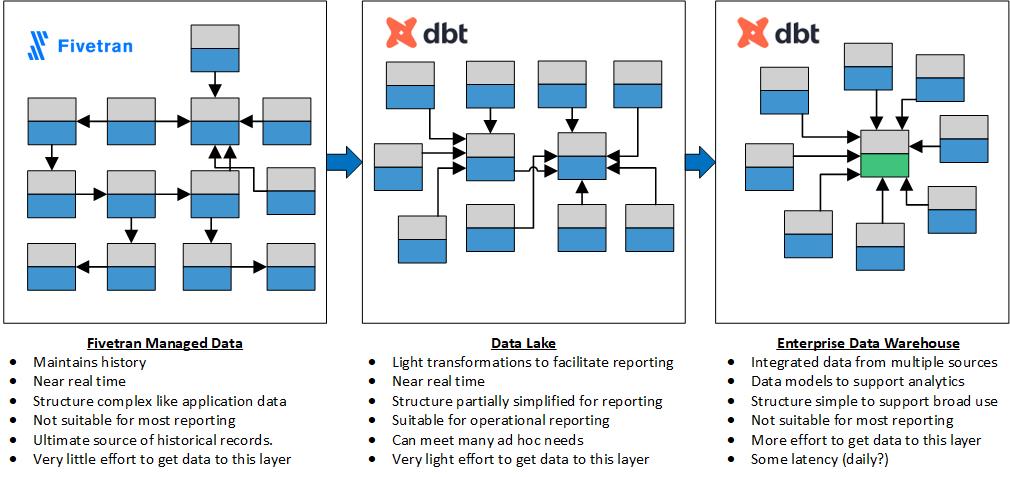

Here is an overview of the data flow through the platform, with summary characteristics of each layer:

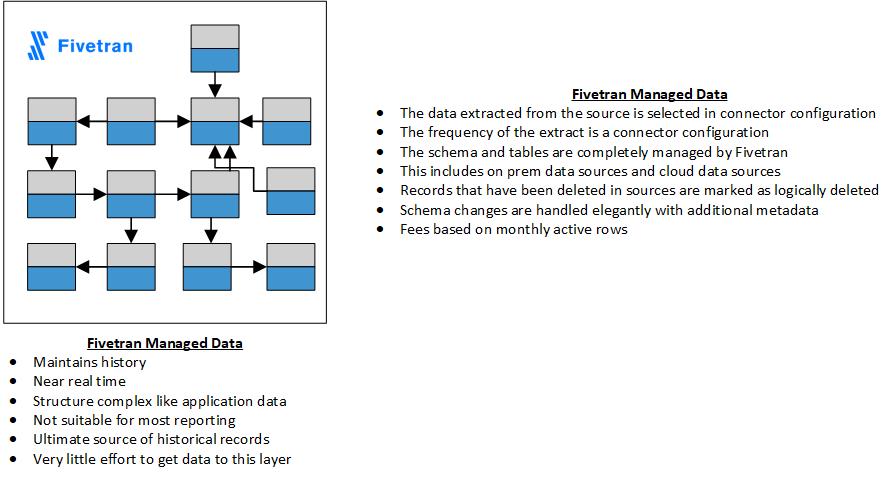

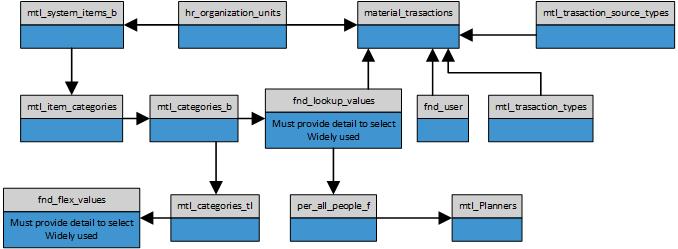

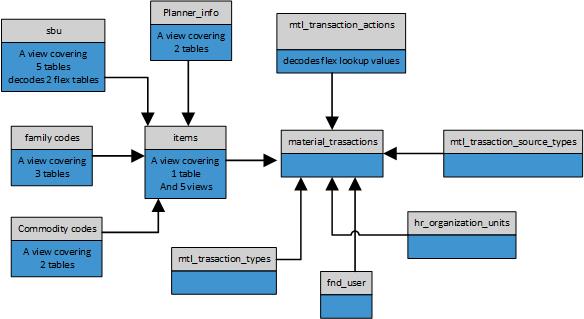

The entity relationship diagrams shown at each layer actually reflect the underlying data using the material transaction data, related ETL and reporting as an example. This illustrates the level of transformation performed at each level. The Fivetran managed data is essentially created and maintained by the Fivetran connectors. There is a financial cost associated with this layer, but there is very limited support and maintenance once connectors are configured and working.

The data structure at this level mirrors the source data. Fivetran does add several metadata columns to each table to manage and track schema changes and deleted records over time.

The data lake is the primary transformation layer, where transformations are implemented to facilitate operational reporting that requires near time data. Transformations are used by downstream dbt models that deliver the EDW layer, for example.

The data structure at the data lake layer has been transformed to make reporting easier. Lookup tables, complex tables and relationships have been simplified into views that make data easier for reporting. The views at this level will not perform as well as materialized tables, but they will provide low latency data (near time). Data from separate applications at this level might not be integrated, unless it has been integrated within the applications.

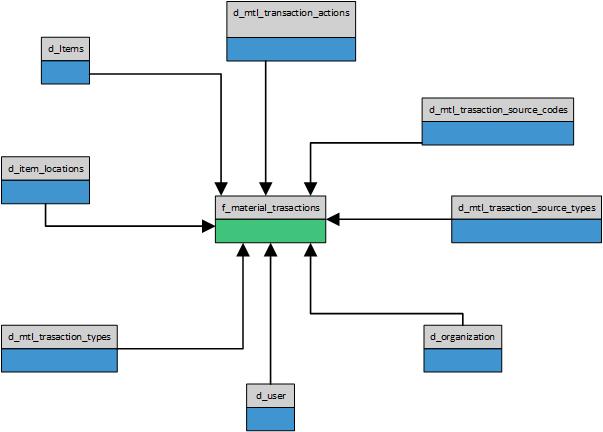

The EDW Layer makes use of the transformations implemented at the data lake layer, and performs any other transformations that simplify reporting or improve performance. The data at this layer has been further transformed to make reporting the easiest, and materialized as tables to maximize performance.

This EDW layer is well suited to serve well governed data to a mature reporting and analytics platforms like Cognos Analytics or Power BI. Because the data has been transformed and delivered into a dimensional model, basic transformation processes enforcing referential integrity have been applied. This reduces errors significantly. This structure also performs well, making it attractive for end users, and cost effective on a consumption based platform like Snowflake. This model is also user friendly, meaning a wider variety of users are able to create analytics content without much support. Platforms like Cognos Analytics take this one step further by providing a metadata model to the users that eliminates the chance of database level error completely.

I would love to hear your thoughts and questions, good or bad. Looking forward to it.

EmL

elealos@quantifiedmechanix.com